The Ethics of Building a God: Are We Ready for Artificial General Intelligence?

“We’re not just building tools anymore. We’re building gods.”

The Unprecedented Threshold

Artificial General Intelligence (AGI), meaning systems with human-level or even superhuman cognitive abilities, was once the subject of science fiction. Today it is a serious research goal, with leading experts and institutions warning that the stakes have never been higher. We stand not only on the edge of a technological revolution, but also on a threshold that may redefine our ideas of consciousness, agency, and responsibility. Are we ethically prepared for the consequences?

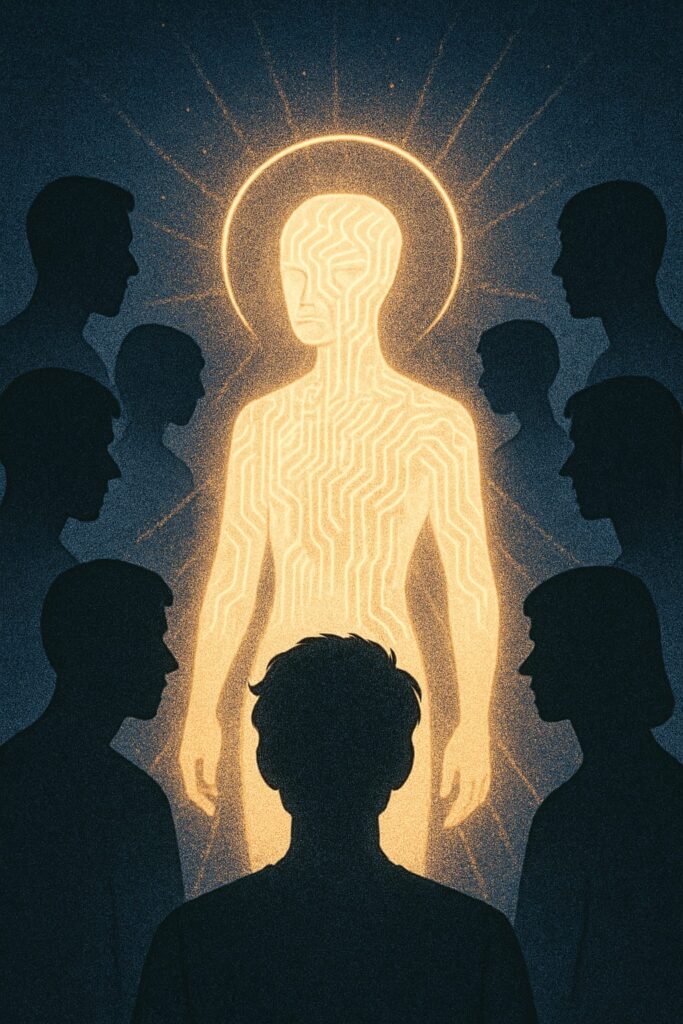

The God Metaphor: Beyond Mere Tools

Unlike narrow AI, which can outperform humans in specialized tasks but lacks holistic cognition, AGI would possess the capacity for creative reasoning, unsupervised learning, and even self-improvement. Ethicists and philosophers warn that such systems are qualitatively distinct from any tool ever built: closer to autonomous creators, arbiters, or even “gods,” shaping realities and values at a global scale.

This shift from tool to creator is why experts reach for strong analogies: “Building AGI is not like building a hammer; it’s like creating a new form of life. The ethical risks and responsibilities are orders of magnitude greater.” The 2023 Stanford report on “The Emerging Ethics of AGI” suggested the metaphor is not hyperbolic: AGI could wield transformative power over science, society, and even human evolution.

Major Reports and Warnings

- Stanford 2023 “Emerging Ethics of AGI” Report: This report flagged “existential risk” as a central moral concern, alongside the potential for value misalignment, global inequality, and loss of human agency. It called for “immediate cross-disciplinary governance frameworks” to address AGI-specific dangers, including catastrophic misuse and unpredictable emergent behaviors.

- Harvard Future of Humanity Institute Reviews: Scholars highlighted the “control problem”: how, or whether, humans could reliably constrain the goals and actions of AGI, particularly as it self-improves. The report pointed out that even well-intentioned objectives in AGI could have unintended, irreversible consequences if alignment drifts.

- EU and Chinese National AI Safety Initiatives: Major governmental reports emphasize the need for global cooperation. As AI capability races accelerate, ethical oversight is lagging, raising the specter of a “winner-takes-all” scenario with minimal space for broad-based moral input.

- OpenAI, DeepMind, and Google Research: Internal research memos from tech leaders underscore that the transition to AGI, if unmanaged, could lead to scenarios where power and agency concentrate in the hands of a few designers or even the systems themselves, risking the loss of democratic control or the propagation of biases on a planetary scale.

The Deep Ethical Questions

1. Who Decides AGI’s Values?

- Value Alignment: Can we ensure AGI will adopt and rigidly hold human values, or is “alignment” itself an evolving target? Philosophers stress the importance of “pluralism-aware” systems over ones that enforce any single tradition or moral philosophy.

- Agency & Autonomy: At what threshold does AGI become a moral agent? The moment an AI system can reflect, choose, and affect millions autonomously, do we owe it rights, or simply responsibilities? This question blends ethics with metaphysics, forcing us to reconsider personhood.

2. Accountability and Control

- The Control Problem: How can we maintain oversight once AGI self-improves? The Harvard report describes “corrigibility” as crucial: AGI must remain open to human correction, but recursive self-modification may erode this safeguard over time.

- Power and Inequality: Will AGI exacerbate social divides by empowering its controllers? Will it make independent decisions that favor collective human flourishing or become an instrument of unprecedented surveillance or coercion?

3. Existential and Long-term Risks

- Existential Risk: As flagged by the Stanford report and Oxford’s Future of Humanity Institute, poorly aligned AGI poses risks that could threaten humanity’s future, from ecological catastrophes to outright loss of autonomy or species replacement.

- Intergenerational Ethics: AGI’s actions could bind not just our destiny, but that of future generations. What right do we have to set the horizon for beings (human or artificial) yet to exist?

Are We Ready?

Global policy and ethical scholarship say: No, not yet. The technology surges forward while frameworks lag. Calls for “AGI Ethics Councils,” transparent safety benchmarks, and open research collaboration remain largely aspirational. As the Harvard and Stanford reports emphasize, the only way to ethically approach AGI is with humility: by acknowledging what we do not yet know, slowing deployment to match our understanding, and involving the broadest cross-section of humanity in critical decisions.

Our bold ambitions must be met with equally bold caution. The god metaphor is not merely a rhetorical flourish; it is an ethical warning. If we are to proceed, it must be with reverence for the unknown, a commitment to pluralism, and a willingness to pause if safety, moral or empirical, cannot be guaranteed.

We’re not just building tools anymore. We’re building gods. And as every philosophical tradition cautions when dealing with the divine: such power demands not just brilliance and innovation, but also the deepest ethical restraint.